A few years ago, shipping software was the hard part.

Today, shipping something that looks like software is trivial.

You can use tools like Lovable to describe an idea in natural language, wire up a few APIs, generate a polished UI, and deploy a working demo before the weekend is over. Landing pages sparkle. Dashboards animate. The product tour feels complete. From the outside, it looks like progress.

But for founders, investors, and operators who live in the details, a new and uncomfortable question keeps popping up.

How do you tell what is real anymore?

Not what runs. Not what demos well. But what is actually built to last.

This is the credibility problem quietly forming underneath the current wave of AI-driven startup creation.

When speed stops being a signal

Speed used to mean something.

If a small team shipped a meaningful product quickly, it suggested focus, technical strength, and good execution. Today, speed is no longer scarce. AI removed friction from the visible parts of product building, but not from the invisible ones.

Architecture decisions. Data models that survive change. Edge cases. Security boundaries. Operational thinking.

These things do not show up in a demo. They show up months later, when users push the system in ways the prompt never anticipated.

The result is a market flooded with tools that look finished but are structurally shallow. For outsiders, especially investors and potential partners, this creates a serious signal problem.

Two products can look identical on the surface. One might be the result of a year of careful iteration by a dedicated team. The other might be the output of a few intense weekends of prompting and glue code.

From the outside, they are indistinguishable.

Due diligence in a world that moves too fast

Traditional due diligence assumes time.

Time to inspect codebases.

Time to talk to customers.

Time to understand team dynamics and technical depth.

Modern fundraising cycles do not always allow for that. Early-stage decisions are often made under pressure, based on partial information, pattern recognition, and perceived momentum.

In that environment, surface-level quality becomes dangerous. Flashy UIs and confident narratives can hide fragile foundations. Investors know this. So do enterprise buyers. So do experienced operators.

This leads to a defensive posture. Skepticism increases. Teams with genuinely deep products are sometimes met with raised eyebrows instead of enthusiasm.

"It looks impressive, but wasn't this just built quickly by AI?"

That question alone can slow down conversations, deals, and trust.

The unfair downside for serious teams

There is a quiet irony in all of this.

Teams that actually do the hard work often suffer the most from the hype.

When expectations are set by tools that promise instant software, everything starts to feel cheap. Complexity gets underestimated. Timelines get questioned. Effort becomes invisible.

Founders hear it in subtle ways.

Why did this take so long? Could this not have been generated faster? Is this really that hard?

Anyone who has built a real system knows the answer. The difficulty is not writing code. The difficulty is designing behavior that holds up when reality intervenes.

AI does not remove that difficulty. It just hides it until later.

Why appearances are no longer enough

We are entering a phase where looking legitimate is easy.

What becomes scarce is provable legitimacy.

Not marketing claims. Not founder confidence. But objective signals that show a product has depth, history, and disciplined execution behind it.

Think about how other industries solved similar problems.

Open source communities rely on contribution history and peer review.

Manufacturing relies on certifications and audits.

Finance relies on standardized reporting.

Software, especially young software companies, lacks a shared way to demonstrate substance beyond screenshots and pitch decks.

That gap is becoming more painful as AI accelerates surface-level production.

The hidden layer that actually matters

What separates a serious product from a weekend experiment usually lives in the workflow, not the UI.

How decisions are documented.

How changes are reviewed.

How incidents are handled.

How priorities evolve over time.

These signals exist, but they are fragmented across tools teams already use. GitHub tells part of the story. Linear or Jira tells another. Slack and calendar context fills in the rest. On their own, none of them provide a coherent picture.

Together, they can.

The problem is not a lack of data. It is a lack of synthesis.

A coming shift in how credibility is established

It is not hard to imagine where this goes.

As the number of AI-generated products keeps rising, the market will look for new filters. New ways to separate serious efforts from disposable ones. Not based on taste or hype, but on evidence.

Evidence of sustained work.

Evidence of intentional decision-making.

Evidence of a team that shows up consistently over time.

In that future, credibility becomes something you can demonstrate, not just claim.

Founders who prepare for that shift early will have an advantage. They will not need to over-explain why something took a year to build. The work will speak for itself.

Those who ignore it may find themselves constantly fighting skepticism, even when their product is genuinely strong.

Preparing without slowing down

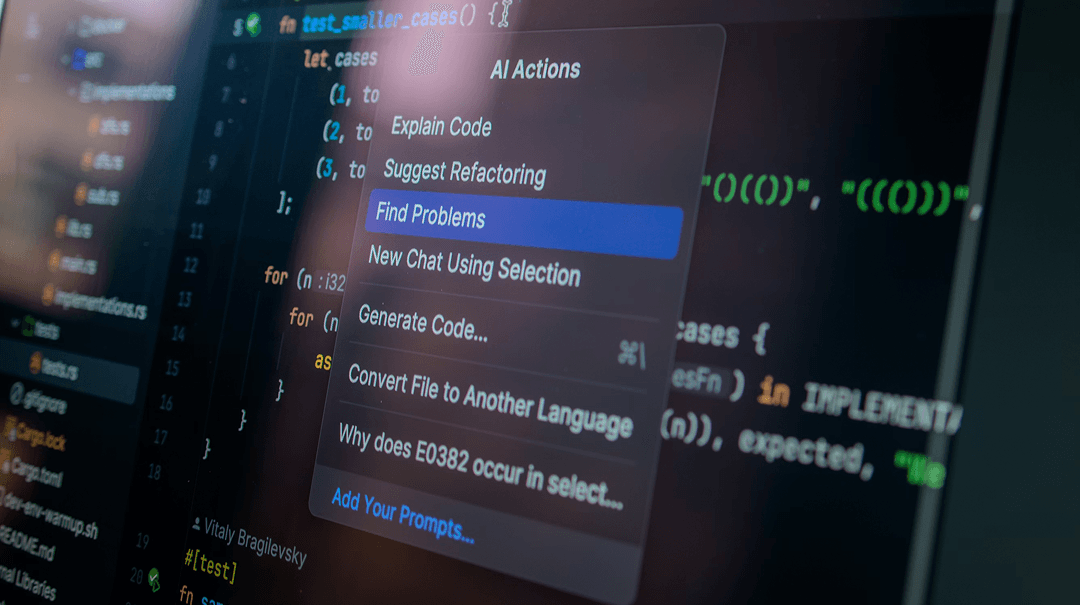

None of this means rejecting AI or modern tooling.

Used well, AI accelerates the right things. It reduces busywork. It helps teams explore ideas faster. It integrates beautifully with the tools engineers already rely on.

The key is intention.

When AI supports a disciplined process, it compounds quality. When it replaces thinking, it creates debt.

Teams that treat their internal workflow as part of the product, not as an afterthought, naturally build credibility over time. Their decisions leave traces. Their progress forms a narrative. Their systems become explainable.

Why this matters now

This is not a future problem.

The credibility gap is already here. It shows up in investor meetings that stall. In procurement cycles that drag on. In users who hesitate to trust young tools with serious data.

As AI keeps lowering the cost of appearing finished, the cost of being trusted goes up.

That is the curve serious founders should pay attention to.

The quiet role One Horizon can play

This is where platforms like One Horizon come in, not as another productivity hack, but as infrastructure for trust that you don't even need to maintain yourself.

By connecting the tools teams already use and turning day-to-day execution into a coherent, verifiable story, such a platform makes it easier to show that a product was not thrown together over a weekend.

Not through claims, but through context.

As the market shifts, having that foundation in place will matter more than most founders expect.

The earlier you prepare, the less you will need to convince later.
